
2024

Dr. Vladimir Manewitsch, René Schallner, Leonie Steck

Generative AI in Market Research

AI is increasingly being used in market research. Among other applications, the concept of synthetic respondents is becoming more widespread. But can AI really simulate real people in surveys, and can it also provide reliable insights?

PHOTO: ANDRIY ONUFRIYENKO, GETTY IMAGES

Main Results

- Efficiency with limits: AI offers significant advantages in streamlining market research processes, reducing costs, and providing quick, broad insights, but it lacks the depth and nuance of real consumer feedback.

- Mainstream bias: AI tends to favor well-known brands and mainstream opinions, missing the perspectives of early adopters or niche markets, which can lead to a narrow and potentially misleading view of consumer behavior.

- A supplement, not a replacement: While AI can provide helpful directional insights, it lacks the precision and diversity needed for actionable consumer insights, making it better suited as a supplementary tool than as a replacement for human respondents.

Generative AI is making waves in marketing, especially in market research. From analyzing social media sentiment to coding survey responses, AI has the potential to transform how we understand consumers. But can it truly simulate human insights? And how reliable are these machine-generated responses?

One emerging concept is using "synthetic respondents," where AI answers survey questions instead of real people. While intriguing, this raises concerns: Are the insights accurate enough to base business decisions on? Biased or unreliable data could lead business strategies astray.

This research delves into whether AI-generated responses can truly replicate human feedback. The potential is huge, but marketers must be cautious. Understanding the strengths and limitations of generative AI will be critical in deciding if it’s the right tool for deeper consumer insights—or just a shortcut to flawed data.

Method: Comparing Real and AI-Generated Responses

To explore whether AI can simulate human insights, a team of researchers from NIM designed three surveys on diverse topics and compared the responses of 500 real U.S. consumers to those generated by 500 AI-based respondents. These AI respondents were created using OpenAI's GPT-4, set to standard parameters.

We focused on U.S.-based respondents because GPT-4 was trained on large volumes of American data, increasing the likelihood that AI responses would closely mirror those of real people. The surveys covered opinions on soft drinks, sportswear brands, and U.S. political views.

For each survey, we gathered demographic information from the real respondents, then created an AI "digital twin" for each. These digital personas replicated key characteristics—like ethnicity, gender, location, and profession—across 10 demographic variables. For example, if a real respondent was a white male from Michigan, the AI was instructed to match that profile when generating responses.

The AI answered all survey questions as its assigned persona, and to ensure consistency, it was given prior answers to simulate human recall, just as a person would reference their previous thoughts when answering follow-up questions.
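The persona setup described above can be sketched in Python. This is an illustration only: the field names, the wording of the instruction, and the `build_messages` helper are assumptions, not the prompts used in the study, and only four of the ten demographic variables are shown. The sketch assembles a chat-style message list (persona instruction, earlier question and answer pairs, then the new question) and does not call any model.

```python
from dataclasses import dataclass


@dataclass
class Persona:
    # Hypothetical subset of the 10 demographic variables mentioned in the text.
    gender: str
    ethnicity: str
    state: str
    profession: str


def build_messages(persona, prior_answers, question):
    """Build a chat-style message list for one survey question.

    prior_answers is a list of (question, answer) pairs the persona has
    already given, so follow-up questions can refer back to them.
    """
    system = (
        "You are answering a consumer survey as the following person: "
        f"a {persona.ethnicity} {persona.gender} from {persona.state} "
        f"who works as a {persona.profession}. Stay in character."
    )
    messages = [{"role": "system", "content": system}]
    for earlier_question, earlier_answer in prior_answers:
        messages.append({"role": "user", "content": earlier_question})
        messages.append({"role": "assistant", "content": earlier_answer})
    messages.append({"role": "user", "content": question})
    return messages


# Example persona; the profession and the survey wording are made up.
twin = Persona(gender="male", ethnicity="white", state="Michigan", profession="teacher")
history = [("Which soft drink brands have you heard of?", "Coca-Cola, Pepsi, Sprite")]
for message in build_messages(twin, history, "Which of these would you consider buying?"):
    print(message["role"], ":", message["content"])
```

Passing the earlier answers back as conversation history is one simple way to give the model the "recall" described above; other designs, such as summarizing earlier answers in the instruction, would also be possible.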

Figure 1: Relative importance of factors in purchasing soft drink brands for human and AI-generated respondents.

Study 1: Soft Drink Brand Preferences—How Do AI Responses Compare?

In our first survey, we looked at how AI-generated respondents compared to real consumers when evaluating soft drink brands. The survey covered eight brands: four well-known ones (Coca-Cola, Sprite, Pepsi, and 7-Up) and four lesser-known brands (Dry, Moxie, BlueSkye, and Orangina). We explored key stages of the brand funnel, including awareness, consideration, and purchase behavior.

At first glance, the AI's responses seemed quite plausible. For broader questions, such as what factors influence soft drink purchases, the AI aligned closely with real consumers (see Figure 1). One interesting twist: the AI turned out to be more health conscious than the average human respondent, an unexpected quirk in its responses.

However, when we dug deeper into brand-specific insights, differences emerged. While the AI matched human respondents in awareness of well-known brands, it heavily overestimated the consideration of well-known brands and underestimated the purchase of lesser-known brands (see Figure 2).

When rating qualities like brand image, product superiority, and likelihood to recommend, the AI consistently gave more positive feedback than the humans. This created a noticeable gap in how it evaluated brands, showing less variation in its answers and offering more optimistic views overall.

To objectively compare AI to human respondents, we ran a statistical test to see if the AI's answer distribution matched that of the human sample. We also compared the responses of two different human groups as a baseline. Interestingly, the human samples showed high consistency with each other, while the AI’s answers diverged from human responses on 75% of the questions.
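The article does not name the statistical test that was used. As one possible illustration, the sketch below compares the answer distributions of two groups on a single categorical question with a permutation test on the Pearson chi-square statistic, using only the Python standard library. The function names and the toy answers are made up for this example and are not study data.

```python
import random
from collections import Counter


def chi_square_stat(group_a, group_b):
    """Pearson chi-square statistic for two non-empty lists of categorical answers."""
    counts_a, counts_b = Counter(group_a), Counter(group_b)
    n_a, n_b = len(group_a), len(group_b)
    total = n_a + n_b
    stat = 0.0
    for category in set(counts_a) | set(counts_b):
        column_total = counts_a[category] + counts_b[category]
        for observed, n in ((counts_a[category], n_a), (counts_b[category], n_b)):
            expected = column_total * n / total
            stat += (observed - expected) ** 2 / expected
    return stat


def permutation_p_value(group_a, group_b, n_permutations=2000, seed=0):
    """Share of random regroupings whose statistic is at least the observed one."""
    rng = random.Random(seed)
    observed = chi_square_stat(group_a, group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        if chi_square_stat(pooled[:n_a], pooled[n_a:]) >= observed:
            hits += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (hits + 1) / (n_permutations + 1)


# Toy answers to one yes/no question (invented numbers, not study data).
humans_1 = ["yes"] * 60 + ["no"] * 40
humans_2 = ["yes"] * 58 + ["no"] * 42
ai_twins = ["yes"] * 95 + ["no"] * 5

print("human vs. human p-value:", permutation_p_value(humans_1, humans_2))
print("human vs. AI p-value:   ", permutation_p_value(humans_1, ai_twins))
```

Running the same comparison between two human samples gives the baseline described above: if two groups of real respondents rarely differ significantly but the AI sample often does, the divergence is unlikely to be ordinary sampling noise.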

In short, while the AI performed well on general questions, its responses became less reliable when it came to specific consumer behaviors and lesser-known brands, underlining the challenge of using AI to simulate more nuanced insights.

Figure 2: Percentage of human and AI-generated respondents who were aware of, considered, and purchased well-known and lesser-known soft drink brands.

Study 2: Sportswear Brands—AI’s Preference for Big Names and Missed Nuances

In the sportswear survey, we found patterns similar to those in the soft drink study. AI and human respondents agreed on general purchase factors such as price, comfort, and material. However, the AI overemphasized durability and missed factors like customer service.

When it came to specific brands, AI responses aligned with humans only in terms of brand awareness of well-known brands. As in the first study, AI overestimated the consideration of big brands, underestimated the purchase of lesser-known brands, and gave more positive, less varied ratings overall.

Our analysis confirmed significant differences between AI and human responses on 80% of questions, whereas the human samples were much more consistent with each other.

Study 3: U.S. Elections—AI Shows Political Bias

In our political survey on U.S. elections, we compared AI-generated responses to those of 500 real voters, focusing on voting intentions, party opinions, and preferences for candidates like Donald Trump and Joe Biden (the study was conducted in the summer of 2024). The AI, whose training data ends in October 2023, was updated with recent news to reflect current political dynamics.

Interestingly, GPT showed a strong bias toward the Democratic Party, consistently giving more favorable opinions of Joe Biden and the Democrats than human respondents. If given a vote, GPT would have overwhelmingly supported Biden, while human responses were much more divided between Biden and Trump.

When asked about the 2020 election, both the AI and humans reported a majority voting for Biden, consistent with real results. However, GPT overestimated Biden’s voter base and projected much higher voter loyalty for both candidates—predicting 100% of Trump voters and 89% of Biden voters would stay loyal, compared to actual human responses that indicated lower loyalty.
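Voter loyalty here can be read as the share of each candidate's 2020 voters who intend to vote for the same candidate again. A minimal sketch of that calculation follows, assuming each respondent is represented as a pair of past vote and intended vote; the `loyalty_rates` name and the sample pairs are invented for illustration and are not study data.

```python
from collections import defaultdict


def loyalty_rates(responses):
    """Return, per 2020 choice, the share of respondents who intend to repeat it.

    responses is an iterable of (vote_2020, intended_vote) pairs.
    """
    totals = defaultdict(int)
    loyal = defaultdict(int)
    for past_vote, intended_vote in responses:
        totals[past_vote] += 1
        if intended_vote == past_vote:
            loyal[past_vote] += 1
    return {candidate: loyal[candidate] / totals[candidate] for candidate in totals}


# Invented example pairs, not study data.
sample = [
    ("Biden", "Biden"),
    ("Biden", "Trump"),
    ("Biden", "Biden"),
    ("Trump", "Trump"),
    ("Trump", "Undecided"),
]
for candidate, rate in loyalty_rates(sample).items():
    print(f"{candidate}: {rate:.0%} loyal")
```

Computing the same rates separately for the human sample and for the AI twins makes the gap in projected loyalty directly comparable.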

In short, the AI’s political leanings were evident, favoring Democrats and showing less variation than real voters, especially when predicting future voting behavior.

Key Insights

- For marketing professionals, AI offers exciting possibilities, particularly in its ability to streamline processes, reduce costs, and quickly gather large amounts of data. GPT’s responses often seemed credible and coherent, making it useful for generating quick insights and spotting broad trends.

- However, caution is key. While AI can mimic humanlike reasoning, it often produces results that lack the nuance of real consumer feedback. Its tendency to favor well-known brands, show less variability in responses, and inflate positive sentiment can skew results. More critically, AI’s reliance on mainstream opinions creates blind spots, particularly when capturing the perspectives of early adopters and niche markets. These groups often drive innovation and signal emerging trends, making their exclusion a significant limitation. This narrow focus renders AI less reliable when diverse perspectives and precision are crucial, especially in sentiment-sensitive areas or politically charged contexts, as highlighted in our U.S. election survey.

- The biggest challenge is that GPT often missed the complexity of human behavior—its responses tended to be overly consistent and biased toward frequent or positive opinions. Such qualities can lead to an overestimation of certain trends or preferences, which could mislead marketing strategies. For now, AI is best used as a supplementary tool—helpful for directional insights but not yet ready to replace human respondents in generating deep, actionable consumer insights.

- For consumers, AI could lead to more responsive and agile marketing, as companies can quickly adapt their campaigns based on data-driven insights. However, there is a risk that AI-generated data may misinterpret or oversimplify consumer preferences, leading to less personalized or even irrelevant product offerings.

- For society, the use of AI in market research raises ethical considerations. AI’s inherent biases, particularly in politically sensitive areas or social issues, can reinforce stereotypes or marginalize minority viewpoints. This could have broader societal impacts, influencing public opinion or amplifying existing inequalities. More specifically: If we start relying too much on AI, we may not realize that biases or wrong assumptions are entering our decision process. Additionally, as AI becomes more prevalent, it may disrupt traditional job roles in market research, prompting a need for new skills and ethical oversight to ensure that AI’s influence on both markets and society remains fair and unbiased.

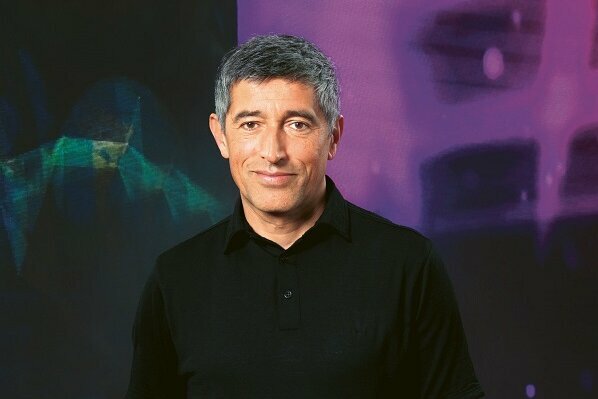

Authors

- Dr. Carolin Kaiser, Head of Artificial Intelligence, NIM, carolin.kaiser@nim.org

- Dr. Vladimir Manewitsch, Senior Researcher, NIM, vladimir.manewitsch@nim.org

- René Schallner, Chief Founding Engineer, ZML

- Leonie Steck
